Docker Compose

Starting services

docker-compose up

Remote

docker -H=remote-docker-engine:2375

docker -H=10.123.2.1:2375 run nginx

Version evolution

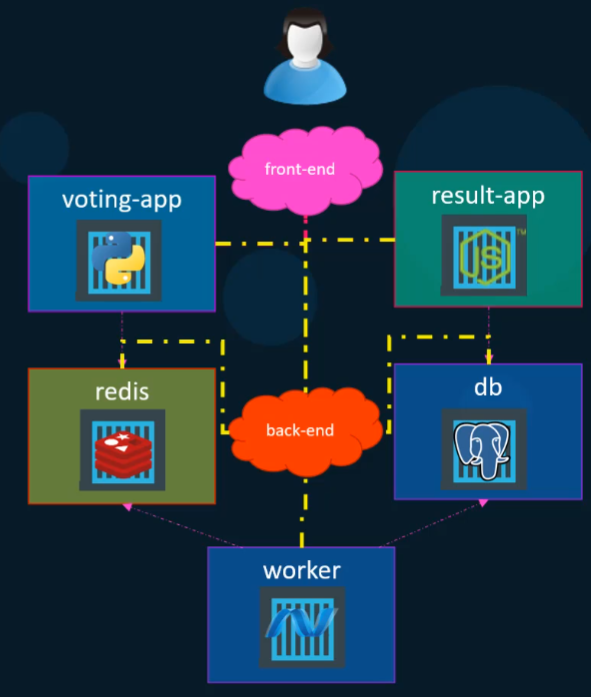

Version 1

Containers are connected with links:

redis:
  image: redis
db:
  image: postgres:9.4
vote:
  image: voting-app
  ports:
    - 5000:80
  links:
    - redis
result:
  image: result-app
  ports:
    - 5001:80
  links:
    - db
worker:
  image: worker
  links:
    - redis
    - db

Version 2

Introduces the top-level services key and user-defined networks:

version: "2"
services:
  redis:
    image: redis
    networks:
      - back-end
  db:
    image: postgres:9.4
    networks:
      - back-end
  vote:
    image: voting-app
    ports:
      - 5000:80
    depends_on:
      - redis
    networks:
      - front-end
      - back-end
  result:
    image: result
    networks:
      - front-end
      - back-end
networks:
  front-end:
  back-end:

Version 3

A simplified configuration structure:

version: "3"
services:
  redis:
    image: redis
  db:
    image: postgres:9.4
  vote:
    image: voting-app
    ports:
      - 5000:80

Deploy

Traditional deployment, one docker run per container:

docker run user/simple-webapp
docker run mongodb
docker run redis:alpine
docker run ansible

Deployment with Docker Compose:

services:
  web:
    image: "user/simple-webapp"
  database:
    image: "mongodb"
  messaging:
    image: "redis:alpine"
  orchestration:
    image: "ansible"

Old Way Container Connect

docker run -d --name=redis redis
docker run -d --name=db postgres
docker run -d --name=vote -p 5000:80 --link redis:redis voting-app
docker run -d --name=result -p 5001:80 --link db:db result-app
docker run -d --name=worker --link redis:redis --link db:db worker

Compose

redis:
  image: redis
db:
  image: postgres:9.4
vote:
  build: ./vote
  ports:
    - 5000:80
  links:
    - redis
result:
  build: ./result
  ports:
    - 5001:80
  links:
    - db
worker:
  build: ./worker
  links:
    - redis
    - db
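
Each entry under `links:` in the Compose file above plays the role of one `--link` flag in the `docker run` commands of the previous section. The sketch below makes that mapping explicit. `link_flags` is a hypothetical helper, not part of Docker or Compose, and it assumes the alias equals the service name, as in the commands shown earlier.

```shell
# Hypothetical helper: print the `--link name:name` flags that a Compose
# `links:` list corresponds to (alias assumed equal to the service name).
link_flags() {
  flags=""
  for svc in "$@"; do
    flags="$flags --link $svc:$svc"
  done
  # Drop the leading space left by the first concatenation.
  printf '%s\n' "${flags# }"
}

# The worker service lists redis and db under `links:`.
link_flags redis db
```

The printed flags can be compared with the worker line of the `docker run` version to check that both describe the same wiring.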

Commands

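Starting and stopping

# Start all services in the background
docker-compose up -d

# Start one service
docker-compose up -d service_name

# Stop all services and remove their containers and networks
docker-compose down

# Stop one service (the others keep running)
docker-compose stop service_name

# Start a stopped service
docker-compose start service_name

# Restart one service
docker-compose restart service_name

# Pause / resume a service
docker-compose pause service_name
docker-compose unpause service_name

Container management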

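# Remove the stopped containers of a service
docker-compose rm service_name

# Stop the containers if they are running, then remove them without asking for confirmation
docker-compose rm -s -f service_name

# Rebuild the image and restart one service
docker-compose up -d --build service_name

# Recreate the containers even if their configuration and image are unchanged
docker-compose up -d --force-recreate service_name

# Remove all services and their volumes
docker-compose down -v

# Remove all services, volumes, and images
docker-compose down -v --rmi all

Build & Image Management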

# Build all services
docker-compose build

# Build one service
docker-compose build service_name

# Rebuild without using the build cache
docker-compose build --no-cache

# Pull images
docker-compose pull

# Pull the image of one service
docker-compose pull service_name

# Push the image of one service
docker-compose push service_name

Scaling & Resource Management

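# Scale services to a given number of instances
docker-compose up -d --scale web=3 --scale worker=2

# Show the status of all services
docker-compose ps

# Show the status of one service
docker-compose ps service_name

# Show the processes running in each container
docker-compose top

# Show live resource usage of this project's containers
docker stats $(docker-compose ps -q)

Logs & Debugging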

# Show the logs of all services
docker-compose logs

# Show the logs of one service
docker-compose logs service_name

# Follow the logs in real time
docker-compose logs -f service_name

# Show the last 100 log lines
docker-compose logs --tail=100 service_name

# Show logs with timestamps
docker-compose logs -t service_name

Container Interaction

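# Run a command in a running container (here: open a bash shell)
docker-compose exec service_name bash

# Run a one-off command in a new container
docker-compose run service_name python manage.py migrate

# Run a one-off command without starting the services it depends on
docker-compose run --no-deps service_name npm test

# Run a one-off command in the background
docker-compose run -d service_name python worker.py

Configuration & Validation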

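# Validate the Compose file without printing it
docker-compose config -q

# Show the resolved configuration (after variable substitution)
docker-compose config

# List all service names
docker-compose config --services

# Combine several Compose files (later files override earlier ones)
docker-compose -f docker-compose.yml -f docker-compose.prod.yml config

# Load variables from a specific env file
docker-compose --env-file .env.prod up -d

Environment Management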

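# Development
docker-compose up -d

# Production (base file plus production overrides)
docker-compose -f docker-compose.yml -f docker-compose.prod.yml up -d

# Test (stops every container once any container exits; cannot be combined with -d)
docker-compose -f docker-compose.test.yml up --abort-on-container-exit

# Clean up the development environment
docker-compose down -v --remove-orphans

# Also remove unused Docker data on the whole host, not only this project
docker system prune -f

Health Check & Monitoring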

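# List unhealthy containers (health is a filter of docker ps)
docker ps --filter "health=unhealthy"

# Start the stack, then wait for the database before continuing
# (assumes the image ships the wait-for-it.sh script on its PATH)
docker-compose up -d
docker-compose exec service_name wait-for-it.sh db:5432 -- echo "DB is ready"

# Show the network interfaces inside a container
docker-compose exec service_name ip addr show

# Check that a port is reachable from inside a container
docker-compose exec service_name telnet redis 6379

Demo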

version: '3.8'

services:
  # Frontend - React + Vite Development Server
  frontend:
    build:
      context: ./frontend
      dockerfile: Dockerfile.dev
    ports:
      - "3000:3000"
    volumes:
      - ./frontend:/app
      - /app/node_modules
    environment:
      - VITE_API_URL=http://localhost:8080
      - VITE_PYTHON_API_URL=http://localhost:8001
      - VITE_NODE_API_URL=http://localhost:8002
      - VITE_TRACING_ENDPOINT=http://tempo:3200
    depends_on:
      - go-server
      - python-server
      - nodejs-server
    networks:
      - app-network
    restart: unless-stopped
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"
        labels: "service=frontend,environment=development"

  # Go Backend Server with OpenTelemetry
  go-server:
    build:
      context: ./go-server
      dockerfile: Dockerfile
    ports:
      - "8080:8080"
    environment:
      - GIN_MODE=debug
      - REDIS_URL=redis:6379
      - REDIS_PASSWORD=redis123
      - DB_HOST=postgres
      - DB_PORT=5432
      - DB_NAME=goapp
      - DB_USER=postgres
      - DB_PASSWORD=password123
      - OTEL_EXPORTER_OTLP_ENDPOINT=http://tempo:4317
      - OTEL_RESOURCE_ATTRIBUTES=service.name=go-server,service.version=1.0.0
      - JAEGER_ENDPOINT=http://tempo:14268/api/traces
    volumes:
      - ./go-server:/app
    depends_on:
      - redis
      - postgres
      - tempo
    networks:
      - app-network
    restart: unless-stopped
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:8080/health"]
      interval: 30s
      timeout: 10s
      retries: 3
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"
        labels: "service=go-server,environment=development"

  # Python FastAPI Server with OpenTelemetry
  python-server:
    build:
      context: ./python-server
      dockerfile: Dockerfile
    ports:
      - "8001:8001"
    environment:
      - PYTHONPATH=/app
      - REDIS_URL=redis://redis:6379
      - REDIS_PASSWORD=redis123
      - DATABASE_URL=postgresql://postgres:password123@postgres:5432/pythonapp
      - OTEL_EXPORTER_OTLP_ENDPOINT=http://tempo:4317
      - OTEL_RESOURCE_ATTRIBUTES=service.name=python-server,service.version=1.0.0
      - OTEL_PYTHON_LOGGING_AUTO_INSTRUMENTATION_ENABLED=true
    volumes:
      - ./python-server:/app
    depends_on:
      - redis
      - postgres
      - tempo
    networks:
      - app-network
    restart: unless-stopped
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:8001/docs"]
      interval: 30s
      timeout: 10s
      retries: 3
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"
        labels: "service=python-server,environment=development"

  # Node.js Express Server with OpenTelemetry
  nodejs-server:
    build:
      context: ./nodejs-server
      dockerfile: Dockerfile
    ports:
      - "8002:8002"
    environment:
      - NODE_ENV=development
      - REDIS_URL=redis://redis:6379
      - REDIS_PASSWORD=redis123
      - DATABASE_URL=postgresql://postgres:password123@postgres:5432/nodeapp
      - PORT=8002
      - OTEL_EXPORTER_OTLP_ENDPOINT=http://tempo:4317
      - OTEL_RESOURCE_ATTRIBUTES=service.name=nodejs-server,service.version=1.0.0
      - OTEL_NODE_ENABLED_INSTRUMENTATIONS=http,express,redis,pg
    volumes:
      - ./nodejs-server:/app
      - /app/node_modules
    depends_on:
      - redis
      - postgres
      - tempo
    networks:
      - app-network
    restart: unless-stopped
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:8002/health"]
      interval: 30s
      timeout: 10s
      retries: 3
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"
        labels: "service=nodejs-server,environment=development"

  # Nginx Reverse Proxy & Load Balancer
  nginx:
    image: nginx:alpine
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - ./nginx/nginx.conf:/etc/nginx/nginx.conf:ro
      - ./nginx/ssl:/etc/nginx/ssl:ro
      - ./nginx/logs:/var/log/nginx
    depends_on:
      - frontend
      - go-server
      - python-server
      - nodejs-server
    networks:
      - app-network
    restart: always
    healthcheck:
      test: ["CMD", "nginx", "-t"]
      interval: 30s
      timeout: 10s
      retries: 3
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"
        labels: "service=nginx,environment=development"

  # Redis Cache Server
  redis:
    image: redis:7-alpine
    ports:
      - "6379:6379"
    command: >
      redis-server
      --appendonly yes
      --requirepass redis123
      --maxmemory 256mb
      --maxmemory-policy allkeys-lru
    volumes:
      - redis_data:/data
      - ./redis/redis.conf:/usr/local/etc/redis/redis.conf
    networks:
      - app-network
    restart: always
    healthcheck:
      test: ["CMD", "redis-cli", "-a", "redis123", "ping"]
      interval: 10s
      timeout: 5s
      retries: 3
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"
        labels: "service=redis,environment=development"

  # RedisInsight - Redis Management UI
  redisinsight:
    image: redis/redisinsight:latest
    ports:
      - "5540:5540"
    environment:
      - RITRUSTEDORIGINS=http://localhost:5540
    volumes:
      - redisinsight_data:/data
    depends_on:
      - redis
    networks:
      - app-network
    restart: unless-stopped

  # PostgreSQL Database
  postgres:
    image: postgres:15-alpine
    ports:
      - "5432:5432"
    environment:
      - POSTGRES_USER=postgres
      - POSTGRES_PASSWORD=password123
      - POSTGRES_MULTIPLE_DATABASES=goapp,pythonapp,nodeapp
    volumes:
      - postgres_data:/var/lib/postgresql/data
      - ./postgres/init-scripts:/docker-entrypoint-initdb.d
      - ./postgres/postgresql.conf:/etc/postgresql/postgresql.conf
    networks:
      - app-network
    restart: always
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 10s
      timeout: 5s
      retries: 5
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"
        labels: "service=postgres,environment=development"

  # pgAdmin - PostgreSQL Management UI
  pgadmin:
    image: dpage/pgadmin4:latest
    ports:
      - "5050:80"
    environment:
      - PGADMIN_DEFAULT_EMAIL=admin@example.com
      - PGADMIN_DEFAULT_PASSWORD=admin123
      - PGADMIN_CONFIG_SERVER_MODE=False
    volumes:
      - pgadmin_data:/var/lib/pgadmin
    depends_on:
      - postgres
    networks:
      - app-network
    restart: unless-stopped

  # LGTM Stack - Loki (Log Aggregation)
  loki:
    image: grafana/loki:2.9.0
    ports:
      - "3100:3100"
    command: -config.file=/etc/loki/local-config.yaml
    volumes:
      - ./lgtm/loki:/etc/loki
      - loki_data:/loki
    networks:
      - app-network
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "wget --no-verbose --tries=1 --spider http://localhost:3100/ready || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 3

  # LGTM Stack - Tempo (Distributed Tracing)
  tempo:
    image: grafana/tempo:2.2.0
    command: [ "-config.file=/etc/tempo.yaml" ]
    volumes:
      - ./lgtm/tempo:/etc/tempo.yaml:ro
      - tempo_data:/tmp/tempo
    ports:
      - "3200:3200"   # tempo
      - "14268:14268" # jaeger ingest
    # Host ports 4317 and 4318 are published by otel-collector, so they are
    # only exposed here; other services reach them on app-network as tempo:4317.
    expose:
      - "4317" # otlp grpc
      - "4318" # otlp http
    networks:
      - app-network
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "wget --no-verbose --tries=1 --spider http://localhost:3200/ready || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 3

  # LGTM Stack - Mimir (Long-term Metrics Storage)
  mimir:
    image: grafana/mimir:2.10.0
    command: ["-config.file=/etc/mimir.yaml"]
    ports:
      - "9009:9009"
    volumes:
      - ./lgtm/mimir:/etc/mimir.yaml:ro
      - mimir_data:/data
    networks:
      - app-network
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "wget --no-verbose --tries=1 --spider http://localhost:9009/ready || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 3

  # LGTM Stack - Grafana (Visualization & Dashboards)
  grafana:
    image: grafana/grafana:10.1.0
    ports:
      - "3001:3000"
    environment:
      - GF_SECURITY_ADMIN_PASSWORD=admin123
      - GF_USERS_ALLOW_SIGN_UP=false
      - GF_FEATURE_TOGGLES_ENABLE=traceqlEditor
      - GF_INSTALL_PLUGINS=redis-datasource,postgresql-datasource
    volumes:
      - grafana_data:/var/lib/grafana
      - ./lgtm/grafana/provisioning:/etc/grafana/provisioning
      - ./lgtm/grafana/dashboards:/var/lib/grafana/dashboards
    depends_on:
      - loki
      - tempo
      - mimir
      - postgres
    networks:
      - app-network
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "wget --no-verbose --tries=1 --spider http://localhost:3000/api/health || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 3

  # Promtail (Log Shipper to Loki)
  promtail:
    image: grafana/promtail:2.9.0
    volumes:
      - /var/log:/var/log:ro
      - /var/lib/docker/containers:/var/lib/docker/containers:ro
      - ./lgtm/promtail:/etc/promtail
    command: -config.file=/etc/promtail/config.yml
    depends_on:
      - loki
    networks:
      - app-network
    restart: unless-stopped

  # OTEL Collector (OpenTelemetry Collector)
  otel-collector:
    image: otel/opentelemetry-collector-contrib:0.87.0
    command: ["--config=/etc/otelcol-contrib/otel-collector.yaml"]
    volumes:
      - ./lgtm/otel-collector:/etc/otelcol-contrib
    ports:
      - "1888:1888"   # pprof extension
      - "8888:8888"   # Prometheus metrics exposed by the collector
      - "8889:8889"   # Prometheus exporter metrics
      - "13133:13133" # health_check extension
      - "4317:4317"   # OTLP gRPC receiver
      - "4318:4318"   # OTLP http receiver
      - "55679:55679" # zpages extension
    depends_on:
      - loki
      - tempo
      - mimir
    networks:
      - app-network
    restart: unless-stopped
    healthcheck:
      test: ["CMD", "wget", "--no-verbose", "--tries=1", "--spider", "http://localhost:13133"]
      interval: 30s
      timeout: 10s
      retries: 3

# Named Volumes for Data Persistence
volumes:
  postgres_data:
    driver: local
  redis_data:
    driver: local
  redisinsight_data:
    driver: local
  pgadmin_data:
    driver: local
  loki_data:
    driver: local
  tempo_data:
    driver: local
  mimir_data:
    driver: local
  grafana_data:
    driver: local

# Custom Networks
networks:
  app-network:
    driver: bridge
    ipam:
      config:
        - subnet: 172.20.0.0/16

LGTM Stack Microservices Architecture

Components Breakdown

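Loki (log aggregation)

Loki is a horizontally scalable, highly available log aggregation system developed by Grafana Labs. It indexes only the labels attached to each log stream, not the log content itself, which makes it lighter and cheaper to run than a traditional ELK Stack. In this architecture Loki receives the logs of all microservices and provides a single interface for querying them.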
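
Because only labels are indexed, a Loki query first selects log streams by label and then scans the matching lines for text. A minimal sketch of composing such a LogQL query follows. `logql_query` is a hypothetical helper, and the `service` label is an assumption about how the log shipper labels its streams.

```shell
# Hypothetical helper: build a LogQL query from one label pair and an optional
# line filter. Usage: logql_query LABEL VALUE [TEXT]
logql_query() {
  label=$1
  value=$2
  text=${3:-}
  # The stream selector is resolved against the label index.
  query="{$label=\"$value\"}"
  # The line filter scans the content of the selected streams.
  if [ -n "$text" ]; then
    query="$query |= \"$text\""
  fi
  printf '%s\n' "$query"
}

logql_query service go-server "connection refused"
```

The resulting string can be pasted into Grafana's Explore view with the Loki data source selected.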

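Grafana (visualization and dashboards)

Grafana is the single visualization layer. It connects to Loki, Tempo, and Mimir and shows logs, traces, and metrics side by side, so developers can correlate the different kinds of observability data in one interface.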

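Tempo (distributed tracing)

Tempo stores and queries distributed tracing data, following a request along its full path through the microservices. When a user request passes through a call chain such as Nginx → Go Server → Redis → PostgreSQL, Tempo shows how long each hop took and where errors occurred.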
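
Tempo can reconstruct that path because every hop forwards the same trace ID. With OpenTelemetry's default W3C Trace Context propagation, the ID travels in the `traceparent` HTTP header as `version-traceid-spanid-flags`. The sketch below pulls the trace ID out of such a header and builds the Tempo lookup URL. `trace_id_from_traceparent` and `tempo_trace_url` are hypothetical helpers, the header value is an illustrative sample, and the base URL assumes Tempo's HTTP port 3200 is published on localhost as in the Compose file.

```shell
# Hypothetical helper: extract the trace ID (second dash-separated field) from
# a W3C traceparent header value of the form version-traceid-spanid-flags.
trace_id_from_traceparent() {
  printf '%s\n' "$1" | cut -d- -f2
}

# Hypothetical helper: URL of Tempo's trace-by-ID endpoint for a trace ID.
tempo_trace_url() {
  printf '%s/api/traces/%s\n' "${TEMPO_URL:-http://localhost:3200}" "$1"
}

# Illustrative header value, not taken from a real request.
header="00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
tempo_trace_url "$(trace_id_from_traceparent "$header")"
```

Fetching the printed URL with curl while the stack is running should return the trace if Tempo has received it.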

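Mimir (long-term metrics storage)

Mimir is Grafana Labs' horizontally scalable, long-term storage backend for Prometheus metrics. It accepts Prometheus remote write and answers PromQL queries through a Prometheus-compatible API, and it is designed for deployments larger than a single Prometheus server handles comfortably.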
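
Prometheus compatibility means existing PromQL can be sent to Mimir's query API. A minimal sketch, assuming Mimir listens on localhost:9009 as in the Compose file, serves the Prometheus API under its default `/prometheus` prefix, and runs with multi-tenancy disabled (otherwise an `X-Scope-OrgID` header is required). `mimir_query` is a hypothetical wrapper around curl.

```shell
# Hypothetical wrapper: run an instant PromQL query against Mimir's
# Prometheus-compatible HTTP API and print the JSON response.
mimir_query() {
  # -G sends the url-encoded data as the query string of a GET request.
  curl -sS -G "${MIMIR_URL:-http://localhost:9009}/prometheus/api/v1/query" \
    --data-urlencode "query=$1"
}

# Example (needs the stack to be running):
#   mimir_query 'up'
```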

Practical Monitoring Workflows

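Scenario 1: Troubleshooting a performance problem

When API response times look abnormal, you can:

- Check the response-time metrics in Grafana (from Mimir)
- Click the anomalous point in time to open the related traces (from Tempo)
- Identify the bottleneck in the trace, then read the logs of that service (from Loki)
- Use the PostgreSQL and Redis data sources to analyze database and cache performance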

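Scenario 2: Error-rate monitoring

Alerts can be built on several data sources:

- The error rate exceeds a threshold (metrics from Mimir)
- A specific error keyword appears in the logs (from Loki)
- The share of traces with errors is abnormal (from Tempo)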
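
The first condition is a simple ratio check. The sketch below shows the arithmetic an error-rate alert performs. `error_rate_exceeds` is a hypothetical helper, the counts would come from a metrics query, and in practice the rule would more likely live in Grafana or Mimir alerting than in a script.

```shell
# Hypothetical helper: succeed (exit status 0) when errors/total is strictly
# greater than PERCENT. Usage: error_rate_exceeds ERRORS TOTAL PERCENT
error_rate_exceeds() {
  errors=$1
  total=$2
  threshold_percent=$3
  # No traffic means there is no error rate to alert on.
  [ "$total" -gt 0 ] || return 1
  # Compare errors/total > percent/100 using integer arithmetic only.
  [ $((errors * 100)) -gt $((total * threshold_percent)) ]
}

# Sample counts: 7 errors out of 100 requests against a 5% threshold.
if error_rate_exceeds 7 100 5; then
  echo "ALERT: error rate above 5%"
fi
```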

Quick Start Commands

# Start the complete LGTM Stack environment
docker-compose up -d

# Start only the core application services (no monitoring)
docker-compose up -d frontend go-server python-server nodejs-server nginx redis postgres

# Start only the monitoring services
docker-compose up -d loki tempo mimir grafana promtail otel-collector

# Check the health status of the monitoring services
docker-compose ps loki tempo mimir grafana

# Follow the OTEL Collector logs (useful for debugging trace data)
docker-compose logs -f otel-collector

# Restart one monitoring component
docker-compose restart grafana

Access URLs

| Service | URL | Credentials |
|---|---|---|
| Grafana Dashboard | http://localhost:3001 | admin/admin123 |
| Frontend Application | http://localhost:3000 | - |
| Go Server API | http://localhost:8080 | - |
| Python Server API | http://localhost:8001 | - |
| Node.js Server API | http://localhost:8002 | - |
| RedisInsight | http://localhost:5540 | - |
| pgAdmin | http://localhost:5050 | admin@example.com/admin123 |
| Loki API | http://localhost:3100 | - |
| Tempo API | http://localhost:3200 | - |
| Mimir API | http://localhost:9009 | - |
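
Before opening these URLs it can help to wait until the services report ready. A minimal sketch that polls the same readiness paths used by the health checks in the Compose file. `wait_until_ready` is a hypothetical helper, and the default attempt count and delay are arbitrary.

```shell
# Hypothetical helper: poll a URL until curl succeeds or the attempts run out.
# Usage: wait_until_ready URL [ATTEMPTS] [DELAY_SECONDS]
wait_until_ready() {
  url=$1
  attempts=${2:-10}
  delay=${3:-3}
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f makes curl fail on HTTP error statuses; the body is discarded.
    if curl -fsS -o /dev/null "$url" 2>/dev/null; then
      echo "ready: $url"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "not ready: $url" >&2
  return 1
}

# Example (needs the stack to be running):
#   wait_until_ready http://localhost:3100/ready       # Loki
#   wait_until_ready http://localhost:3001/api/health  # Grafana
```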